This is a Node.js addon for running LLMs with OpenVINO GenAI. It was tested with the TinyLlama 1.1B Chat v1.0 OpenVINO int4 model on Windows 11 (Intel Core i7 CPU).

Watch the YouTube video below for a demo:

Build requirements:

- Visual Studio 2022 (C++)

- Node 20.11 or higher

- node-gyp

- Python 3.11

- OpenVINO GenAI release openvino_genai_windows_2024.2.0.0_x86_64
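
Before building, it can help to confirm that the installed Node.js meets the minimum version listed above. The following is a minimal sketch, not part of the addon; the helper name `isAtLeast` and the constant `MIN_NODE` are made up for illustration, and only `process.versions.node` is a built-in Node.js property.

```javascript
// Minimum Node.js version, taken from the requirements list above.
const MIN_NODE = '20.11.0';

// Hypothetical helper: compare two dotted version strings numerically,
// part by part, so that "20.9.0" is correctly treated as older than "20.11.0".
function isAtLeast(version, minimum) {
  const a = version.split('.').map(Number);
  const b = minimum.split('.').map(Number);
  for (let i = 0; i < Math.max(a.length, b.length); i++) {
    const x = a[i] || 0; // missing or non-numeric parts count as 0
    const y = b[i] || 0;
    if (x !== y) return x > y;
  }
  return true; // all parts equal
}

const current = process.versions.node; // version string without a leading "v"
if (isAtLeast(current, MIN_NODE)) {
  console.log(`Node ${current} is OK`);
} else {
  console.log(`Node ${current} is too old; need ${MIN_NODE} or higher`);
}
```

The comparison is numeric rather than lexical, because a plain string comparison would rank "20.9" above "20.11".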

Run the following commands to build:

npm install

node-gyp configure

node-gyp build

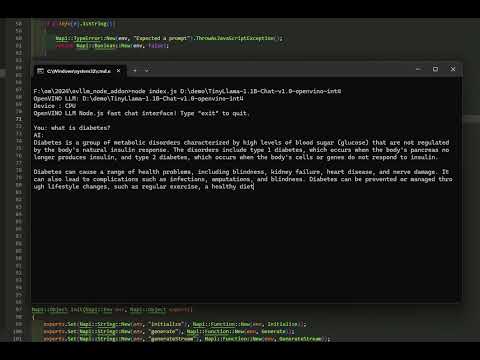
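
The three build commands above can also be run from a single Node.js script that stops at the first failure. This is only a sketch under assumptions: the names `BUILD_STEPS` and `runBuild` are hypothetical and not part of this repository, and it assumes `npm` and `node-gyp` are available on the PATH.

```javascript
const { execSync } = require('node:child_process');

// The build commands from this README, in order.
const BUILD_STEPS = ['npm install', 'node-gyp configure', 'node-gyp build'];

// Hypothetical helper: run each step in sequence.
// The default runner uses execSync, which throws if a command exits with a
// non-zero status, so later steps are skipped after a failure.
function runBuild(exec = (cmd) => execSync(cmd, { stdio: 'inherit' })) {
  for (const step of BUILD_STEPS) {
    console.log(`> ${step}`);
    exec(step);
  }
  return BUILD_STEPS.length; // number of steps that completed
}

// To start the build, call:
// runBuild();
```

The runner is passed in as a parameter so the sequence can be checked with a stub without actually invoking the compiler.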

To test the Node.js OpenVINO LLM addon, run the index.js script with the model path as its argument:

node index.js D:/demo/TinyLlama-1.1B-Chat-v1.0-openvino-int4

To disable streaming, add nostream after the model path:

node index.js D:/demo/TinyLlama-1.1B-Chat-v1.0-openvino-int4 nostream
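
The two invocations above differ only in the optional second argument. As an illustration of how a script could read a command line of that shape, here is a minimal sketch; it is not taken from this project's index.js, and the function name `parseCliArgs` and the returned field names are assumptions.

```javascript
// Hypothetical helper: interpret "node index.js <model-path> [nostream]".
function parseCliArgs(argv) {
  // argv[0] is the node binary and argv[1] is the script path,
  // so the user-supplied arguments start at index 2.
  const [modelPath, mode] = argv.slice(2);
  if (!modelPath) {
    throw new Error('Usage: node index.js <model-path> [nostream]');
  }
  // Streaming stays on unless the second argument is exactly "nostream".
  return { modelPath, stream: mode !== 'nostream' };
}

// Example using the command line shown above.
const options = parseCliArgs([
  'node',
  'index.js',
  'D:/demo/TinyLlama-1.1B-Chat-v1.0-openvino-int4',
  'nostream',
]);
console.log(options);
```

In a real script the argument would be `process.argv` rather than a hard-coded array.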

Supported models are here